ML-ppt

ML-homework

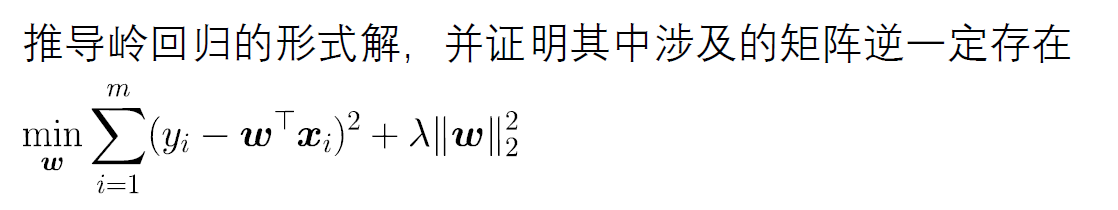

Problem 1

Background:

Matrix differentiation formulas:

Then:
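Below are standard matrix-calculus identities for vectors $a, x$ and a square matrix $A$ (denominator layout). The block also shows the stationarity condition they give for the L2-regularized least-squares objective $J(w) = \lVert Xw - y\rVert^2 + \lambda\lVert w\rVert^2$. The objective is assumed here because the notes below discuss ridge regression. The symbols $X$, $y$, $w$, $\lambda$ are chosen for illustration and may differ from the homework's notation.

$$
\frac{\partial (a^\top x)}{\partial x} = a, \qquad
\frac{\partial (x^\top A x)}{\partial x} = (A + A^\top)\,x, \qquad
\frac{\partial \lVert Xw - y\rVert^2}{\partial w} = 2X^\top (Xw - y)
$$

$$
\nabla_w J(w) = 2X^\top (Xw - y) + 2\lambda w = 0
\;\Longrightarrow\;
\hat w_{\text{ridge}} = (X^\top X + \lambda I)^{-1} X^\top y
$$

For $\lambda > 0$ the matrix $X^\top X + \lambda I$ is always invertible. Setting $\lambda = 0$ recovers the least-squares normal equations.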

Solution:

Notes:

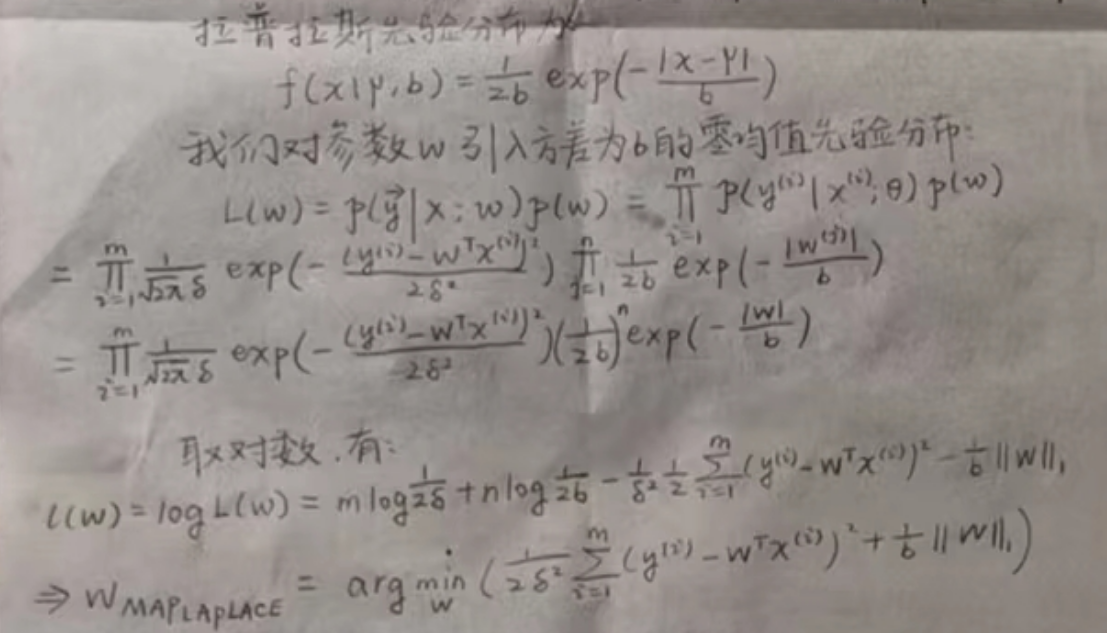

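- Regularization can be viewed as a penalty term added to the loss function.

- For $\lambda > 0$, the norm of the ridge estimate is strictly smaller than the norm of the least-squares estimate (whenever the latter is nonzero). In other words, adding the L2 penalty shortens the parameter vector. This is called shrinkage (feature shrinkage).

- Shrinkage pushes the coefficients of less influential features toward 0, so the model relies mainly on the important features. This lowers model complexity and reduces overfitting. Ridge (L2) shrinks coefficients toward 0 but generally not exactly to 0; exact zeros come from L1 (lasso) regularization.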
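The sketch below uses NumPy to compute the closed-form ridge estimator $\hat w = (X^\top X + \lambda I)^{-1}X^\top y$ on hypothetical synthetic data. The function name `ridge` and all data are illustrative. It shows that the norm of the estimate shrinks as $\lambda$ grows, with $\lambda = 0$ giving the least-squares estimate. It also shows that the coefficients of the irrelevant features get small but do not become exactly 0.

```python
import numpy as np

def ridge(X, y, lam):
    """Closed-form ridge estimate (X^T X + lam*I)^{-1} X^T y; lam = 0 gives OLS."""
    d = X.shape[1]
    # Solve the linear system instead of forming the inverse explicitly.
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

# Hypothetical synthetic data: the last two features have no real effect on y.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_w = np.array([3.0, -2.0, 0.5, 0.0, 0.0])
y = X @ true_w + 0.1 * rng.normal(size=50)

w_ols = ridge(X, y, 0.0)
lams = [0.1, 1.0, 10.0, 100.0]
ridge_ws = [ridge(X, y, lam) for lam in lams]

print("OLS norm:", np.linalg.norm(w_ols))
for lam, w in zip(lams, ridge_ws):
    print(f"lam={lam:<6} norm={np.linalg.norm(w):.4f} coef={np.round(w, 3)}")
```

Using `np.linalg.solve` rather than `np.linalg.inv` is the usual, numerically safer way to evaluate this formula.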

Problem 2

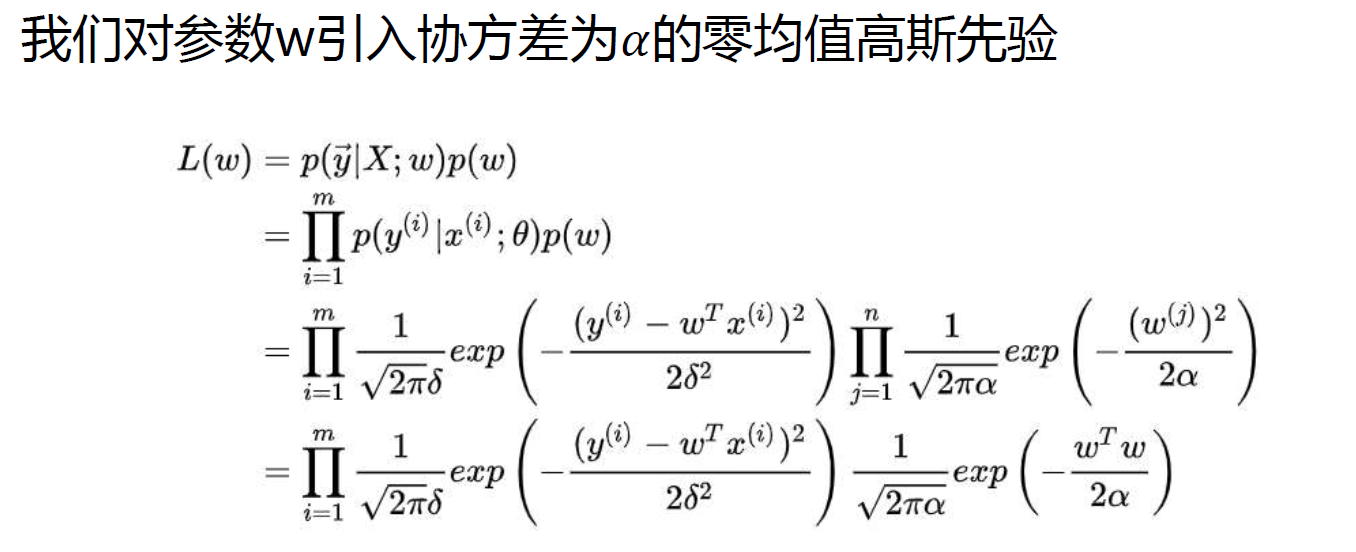

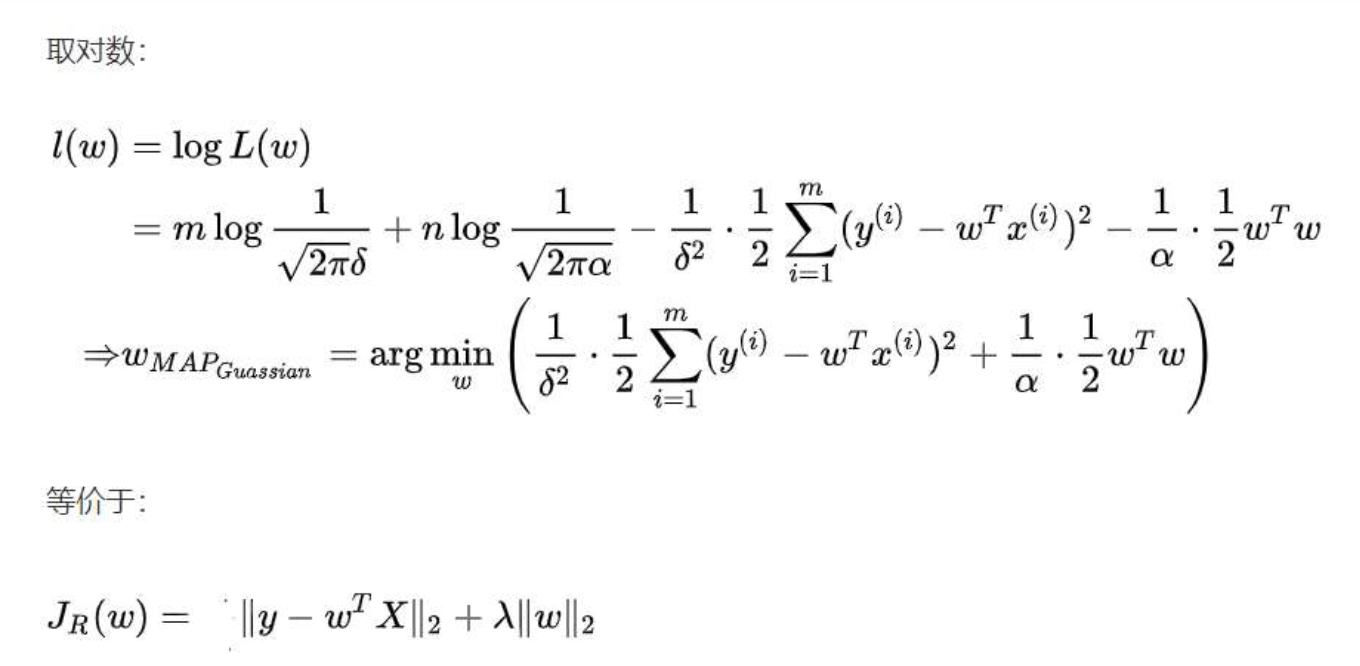

Background:

Solution:

Notes:

Problem 3

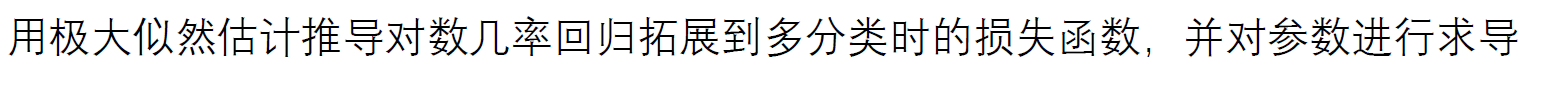

Background:

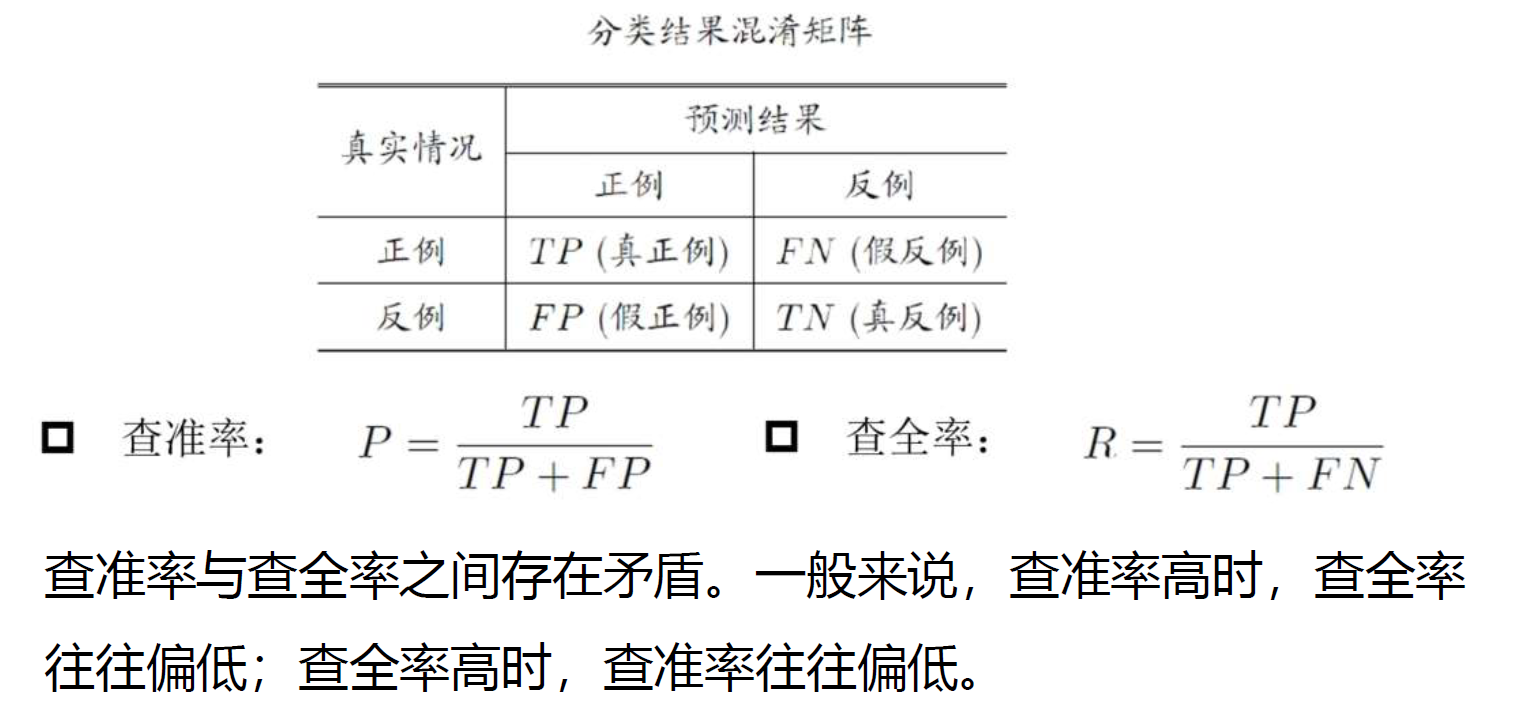

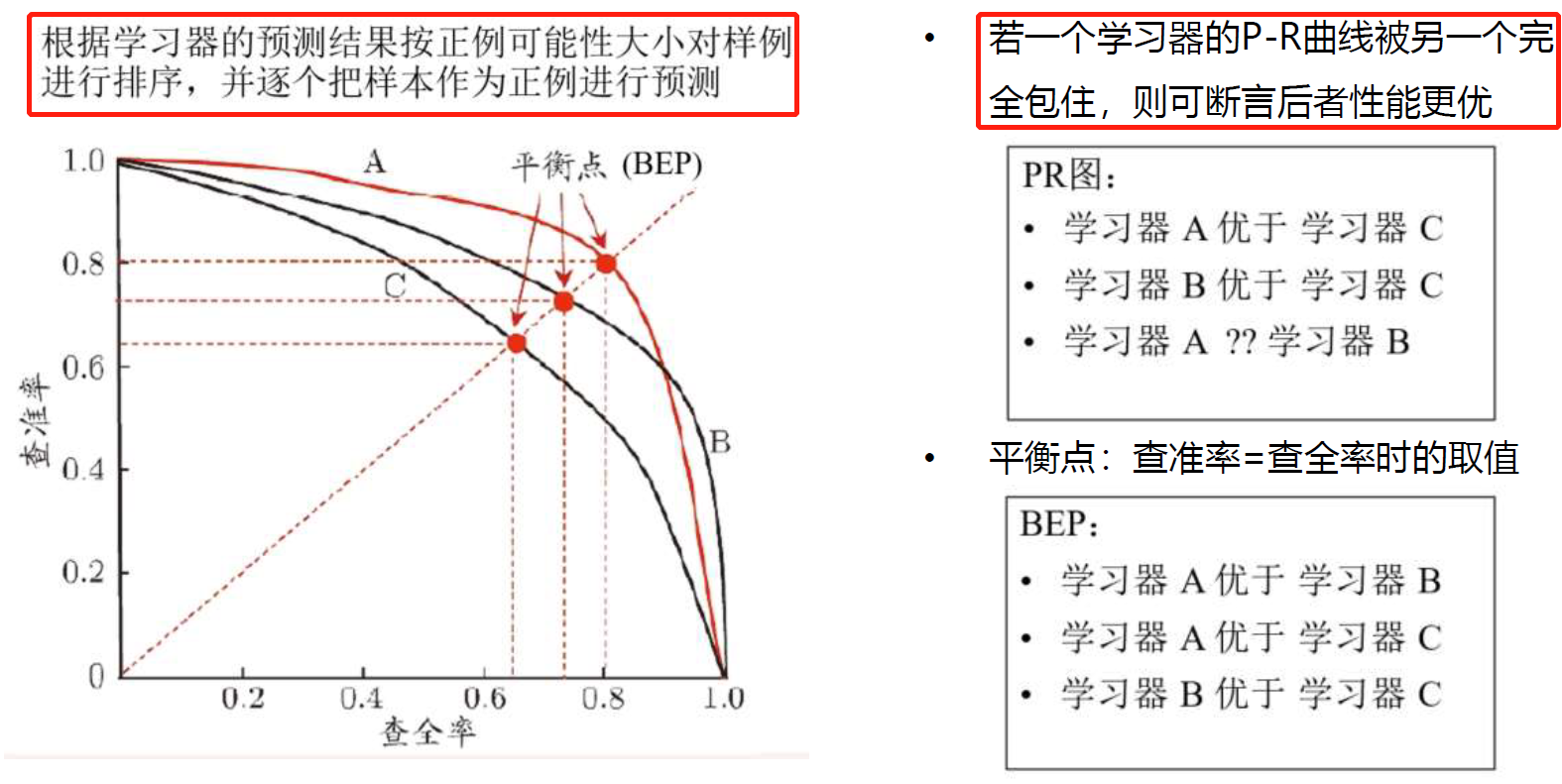

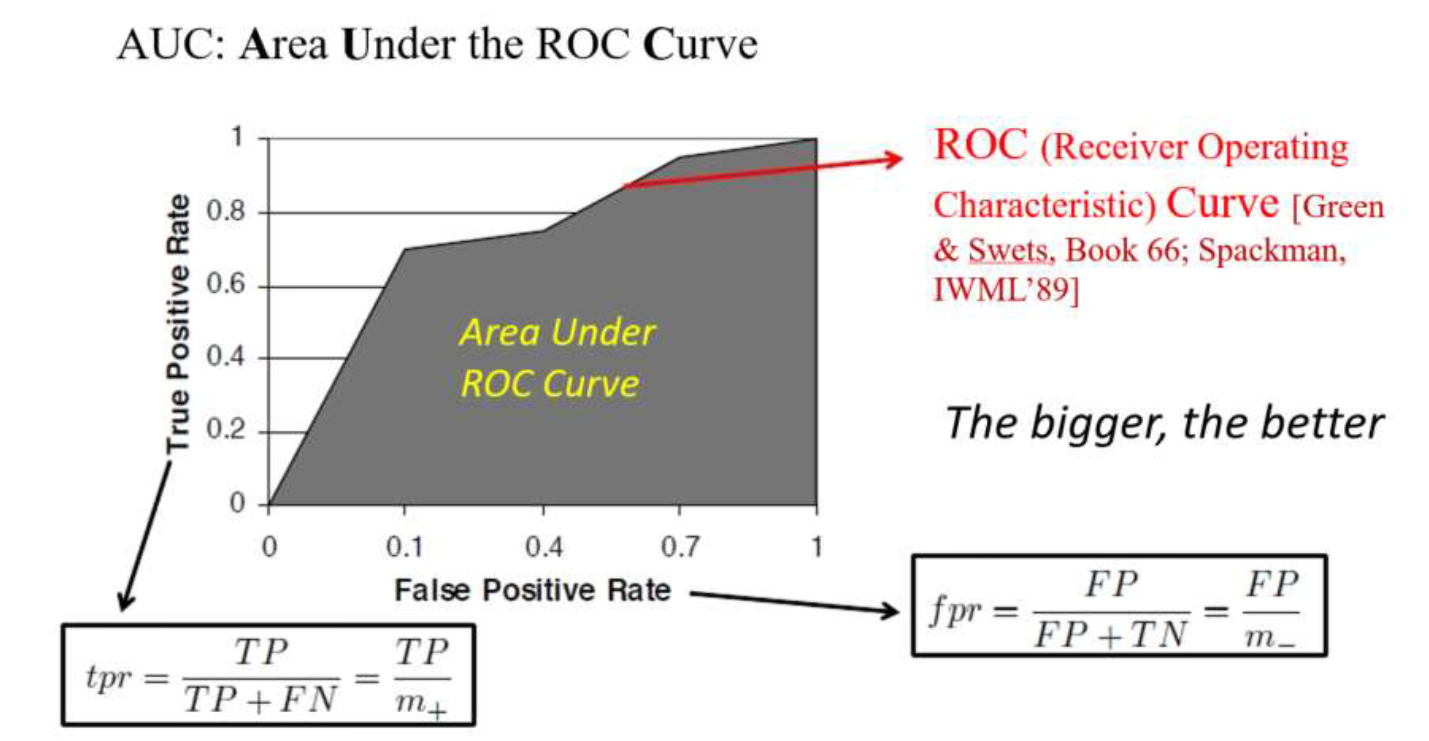

Evaluation metrics for classification:

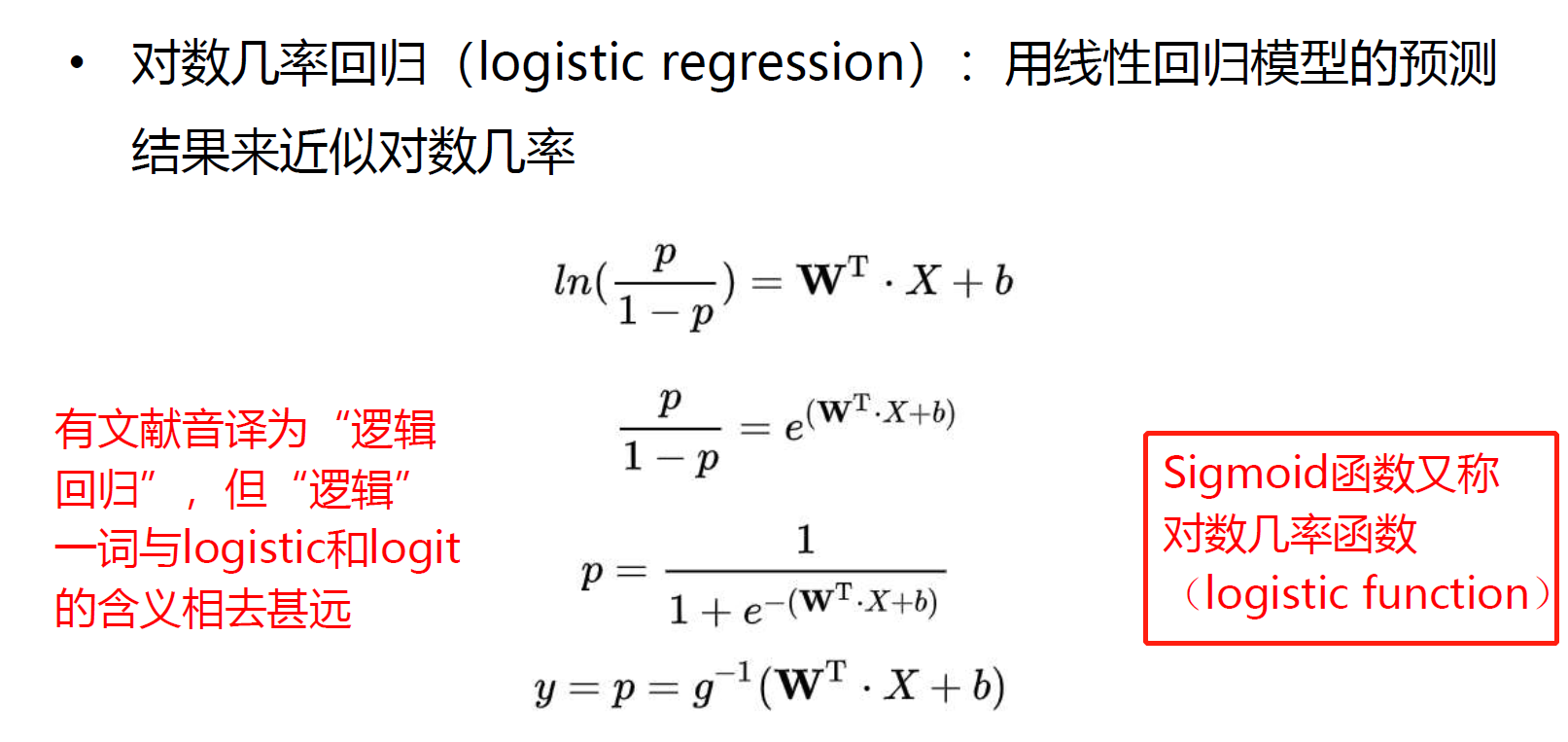
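The exact metrics in the figure above are not reproduced here. As a sketch, the common confusion-matrix metrics for binary classification can be computed as follows. The function name `classification_metrics` and the example labels are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix counts plus accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when nothing is predicted / present as positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels, for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this example TP = 3, FN = 1, FP = 1 and TN = 3, so accuracy, precision, recall and F1 all equal 0.75.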

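Logistic regression (log-odds regression) uses a regression function for a classification task. A linear model predicts the log-odds $\ln\frac{p}{1-p} = w^\top x + b$, and the sigmoid function maps this to the class probability $p = \frac{1}{1 + e^{-(w^\top x + b)}}$.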
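Here is a minimal NumPy sketch of logistic regression trained by batch gradient descent on the mean cross-entropy loss. The function names, learning rate, iteration count and the tiny 1-D dataset are all assumptions chosen for illustration. This is not necessarily how the homework solves it.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    # Append a column of ones so the last weight acts as the intercept b.
    return np.hstack([X, np.ones((X.shape[0], 1))])

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Batch gradient descent on the mean negative log-likelihood (cross-entropy)."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ w)              # predicted P(y = 1 | x)
        grad = Xb.T @ (p - y) / len(y)   # gradient of the mean cross-entropy
        w -= lr * grad
    return w

def predict(w, X, threshold=0.5):
    # Classify as 1 when the predicted probability reaches the threshold.
    return (sigmoid(add_bias(X) @ w) >= threshold).astype(int)

# Hypothetical, linearly separable toy data.
X = np.array([[-3.0], [-2.0], [-1.0], [1.0], [2.0], [3.0]])
y = np.array([0, 0, 0, 1, 1, 1])
w = fit_logistic(X, y)
preds = predict(w, X)
print(preds)  # [0 0 0 1 1 1]
```

The gradient $X^\top(p - y)/n$ has the same form as the least-squares gradient. Only the prediction $p$ differs, since it passes through the sigmoid.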

Solution:

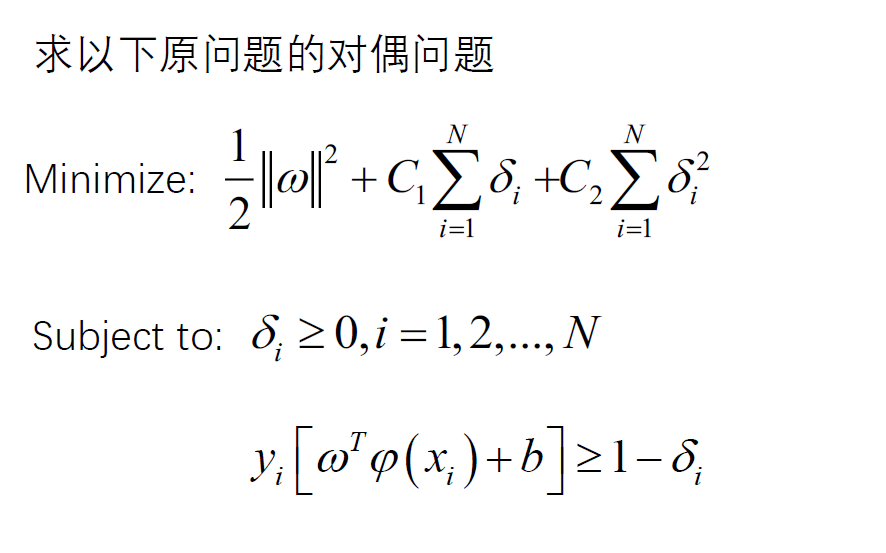

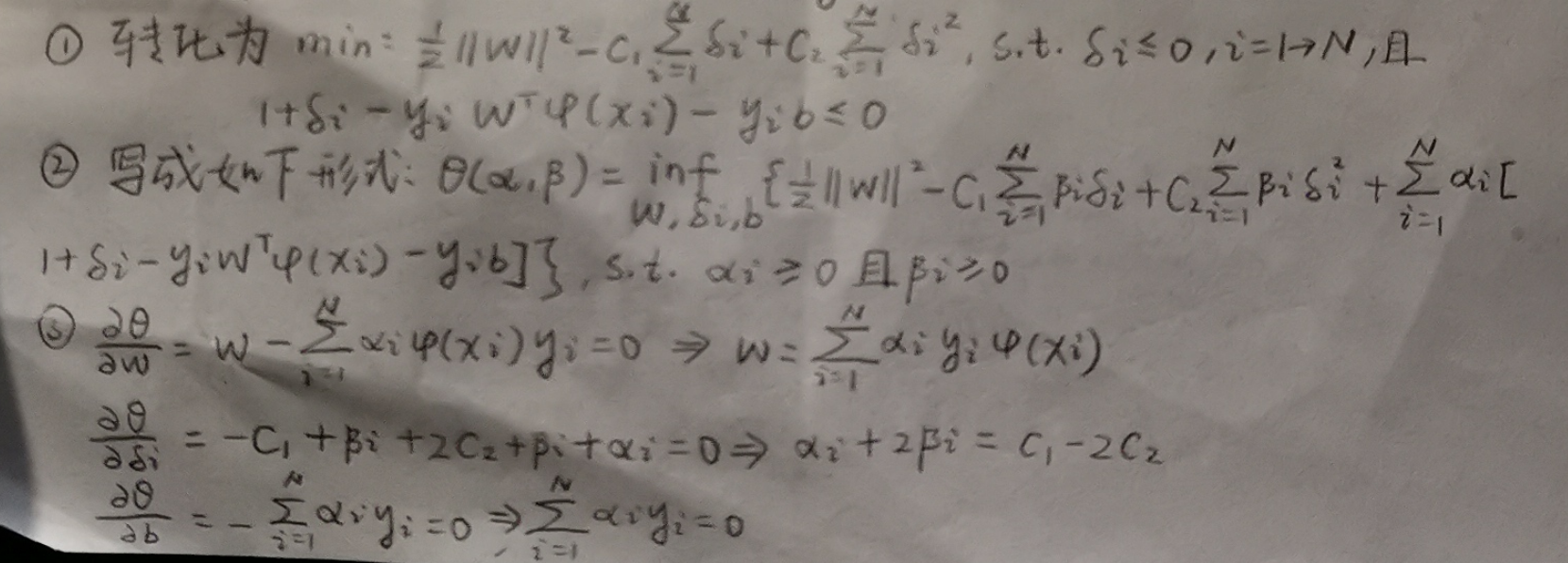

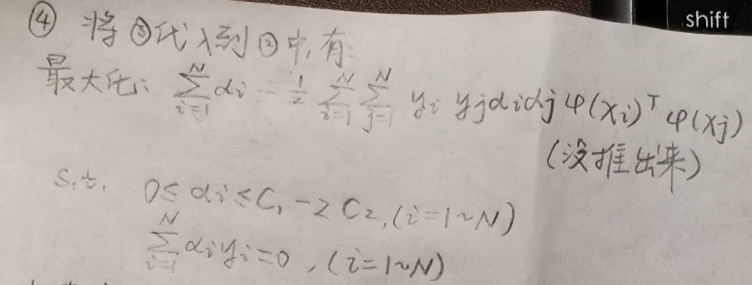

Problem 4

Solution:

Notes:

The dual problem of the support vector machine (SVM):

https://zhuanlan.zhihu.com/p/39592364

https://blog.csdn.net/weixin_40859436/article/details/80647547

https://www.jianshu.com/p/de882f0fc434

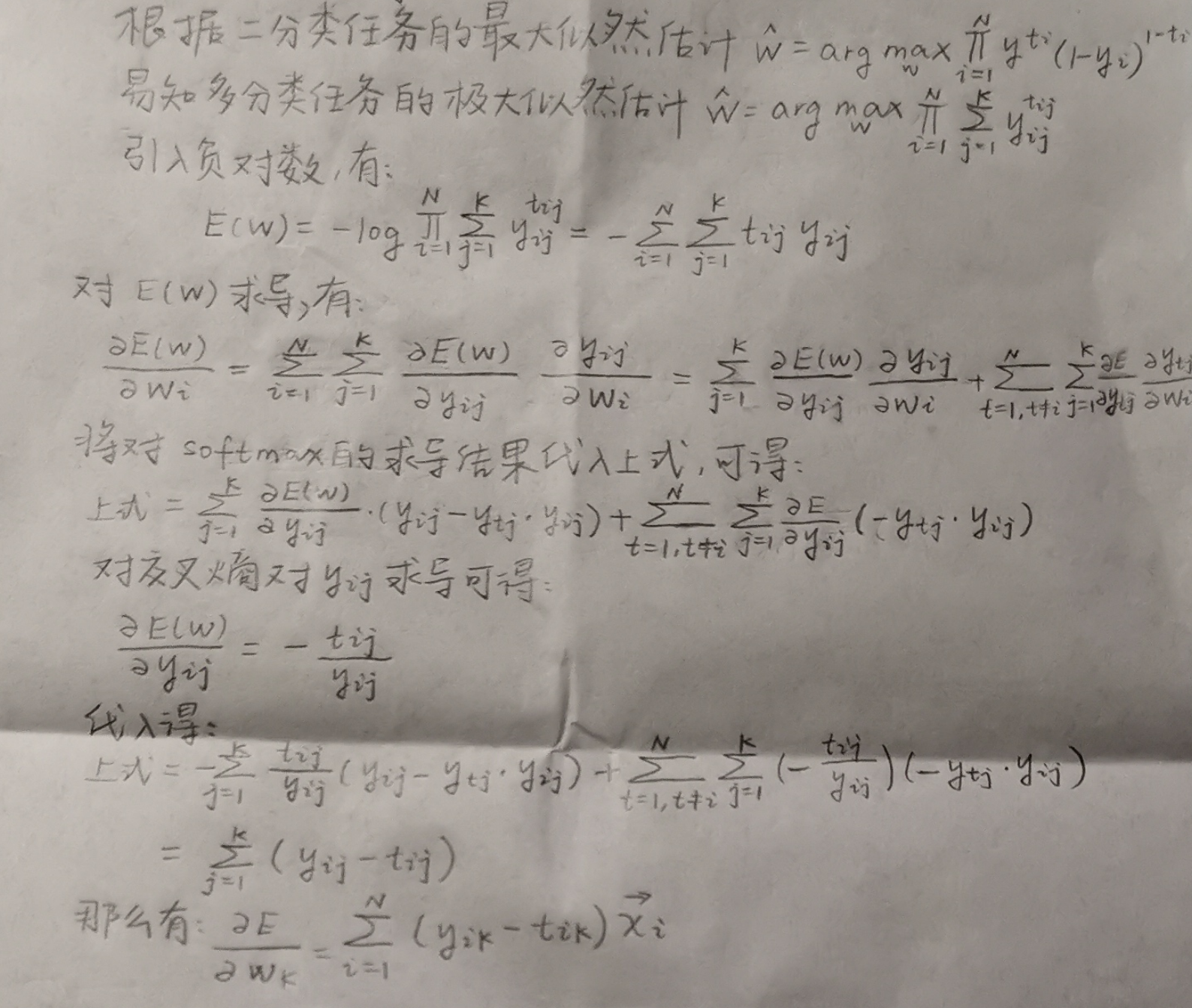

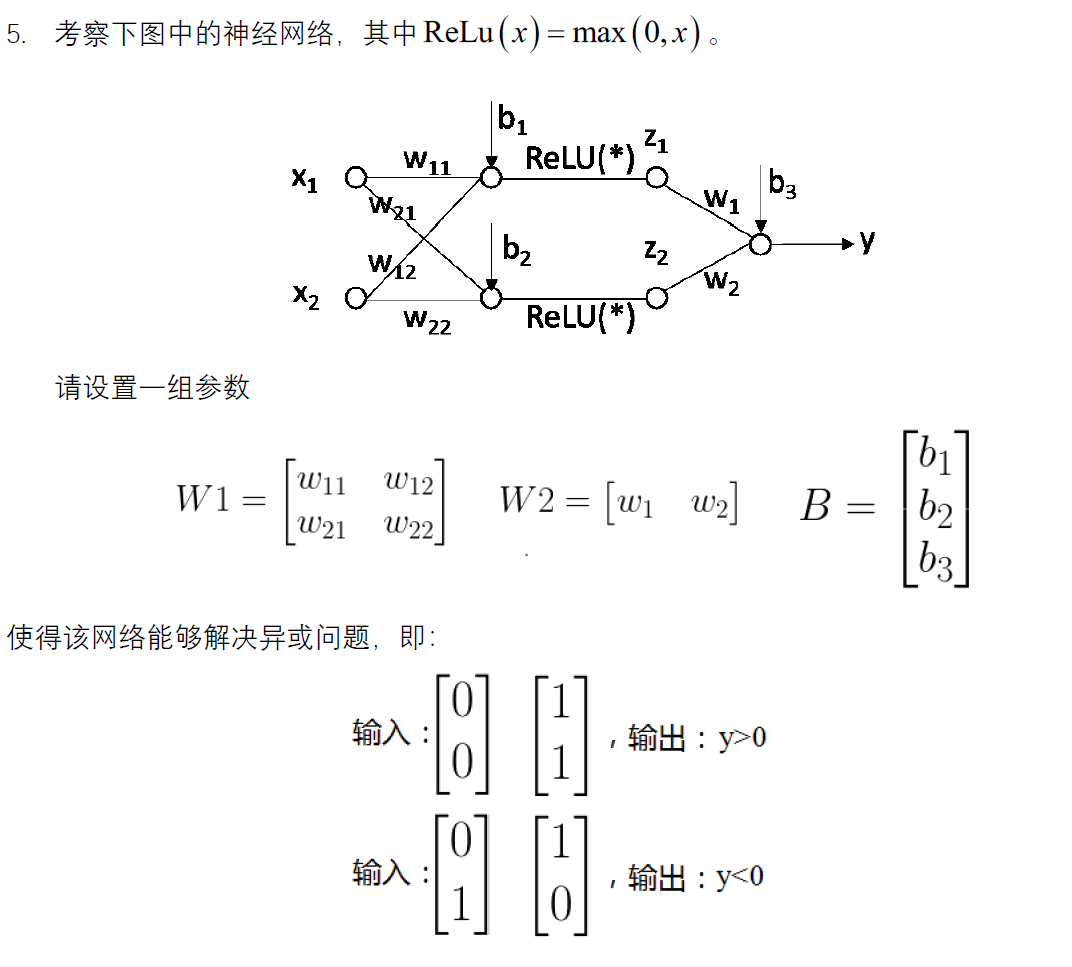
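For reference, here is the standard textbook form of the soft-margin SVM primal and its Lagrangian dual. It assumes labels $y_i \in \{-1, +1\}$ and penalty parameter $C$; this notation is assumed and may differ from the homework's.

$$
\min_{w,b,\xi}\; \tfrac12\lVert w\rVert^2 + C\sum_{i=1}^{n}\xi_i
\quad\text{s.t.}\quad y_i(w^\top x_i + b) \ge 1 - \xi_i,\;\; \xi_i \ge 0
$$

$$
\max_{\alpha}\; \sum_{i=1}^{n}\alpha_i - \tfrac12\sum_{i=1}^{n}\sum_{j=1}^{n}\alpha_i\alpha_j\, y_i y_j\, x_i^\top x_j
\quad\text{s.t.}\quad \sum_{i=1}^{n}\alpha_i y_i = 0,\;\; 0 \le \alpha_i \le C,
\qquad w = \sum_{i=1}^{n}\alpha_i y_i x_i
$$

For the hard-margin SVM, drop $\xi$ and the upper bound $C$, so only $\alpha_i \ge 0$ remains. Because the data enter the dual only through inner products $x_i^\top x_j$, replacing them with a kernel $k(x_i, x_j)$ gives the kernelized SVM.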

Problem 5

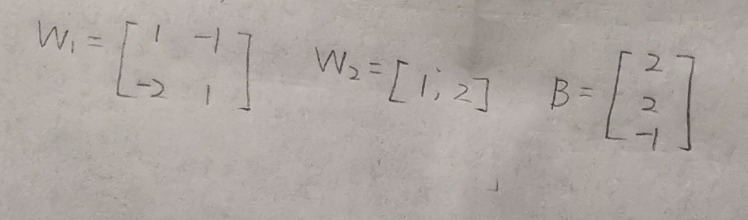

Solution:

The following script was used:

```python
#!/usr/bin/env python
```

Final result:

Notes:

The XOR problem in neural networks
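The following illustrates the XOR problem. It is a sketch with hand-picked, hypothetical weights, not the script used above. First, a single threshold unit cannot reproduce XOR, because the positive and negative points are not linearly separable. The coarse grid search only illustrates this; it is not a proof. Second, one hidden layer with an OR unit and an AND unit solves XOR. The step activation is chosen for readability; trained networks use differentiable activations such as the sigmoid.

```python
import itertools
import numpy as np

def step(z):
    # Heaviside threshold activation: 1 if z > 0 else 0.
    return (z > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_xor = np.array([0, 1, 1, 0])

# 1) Single threshold unit step(w1*x1 + w2*x2 + b): search a coarse weight grid.
grid = np.linspace(-2, 2, 9)
single_layer_fits = any(
    np.array_equal(step(X @ np.array([w1, w2]) + b), y_xor)
    for w1, w2, b in itertools.product(grid, repeat=3)
)
print("single unit fits XOR on grid:", single_layer_fits)  # False

# 2) One hidden layer: h1 = OR(x1, x2), h2 = AND(x1, x2), output = h1 AND NOT h2.
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])   # column j holds the input weights of hidden unit j
b1 = np.array([-0.5, -1.5])  # OR fires when x1 + x2 >= 1, AND when x1 + x2 >= 2
w2 = np.array([1.0, -1.0])
b2 = -0.5

def xor_net(X):
    h = step(X @ W1 + b1)      # hidden activations, shape (n, 2)
    return step(h @ w2 + b2)   # network output, shape (n,)

print(xor_net(X))  # [0 1 1 0]
```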


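- Article link: https://wd-2711.tech/

- Copyright notice: Unless otherwise stated, all articles on this blog are licensed under the CC BY-NC-ND 4.0 license. Please credit the source when reposting!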